Summary / Key Takeaways:

- Videos now serve as authoritative answers in AI search results—without accurate transcripts, your content is invisible to LLMs

- YouTube is the #1 most-cited domain in Google Gemini, making it essential for AI search visibility and brand authority

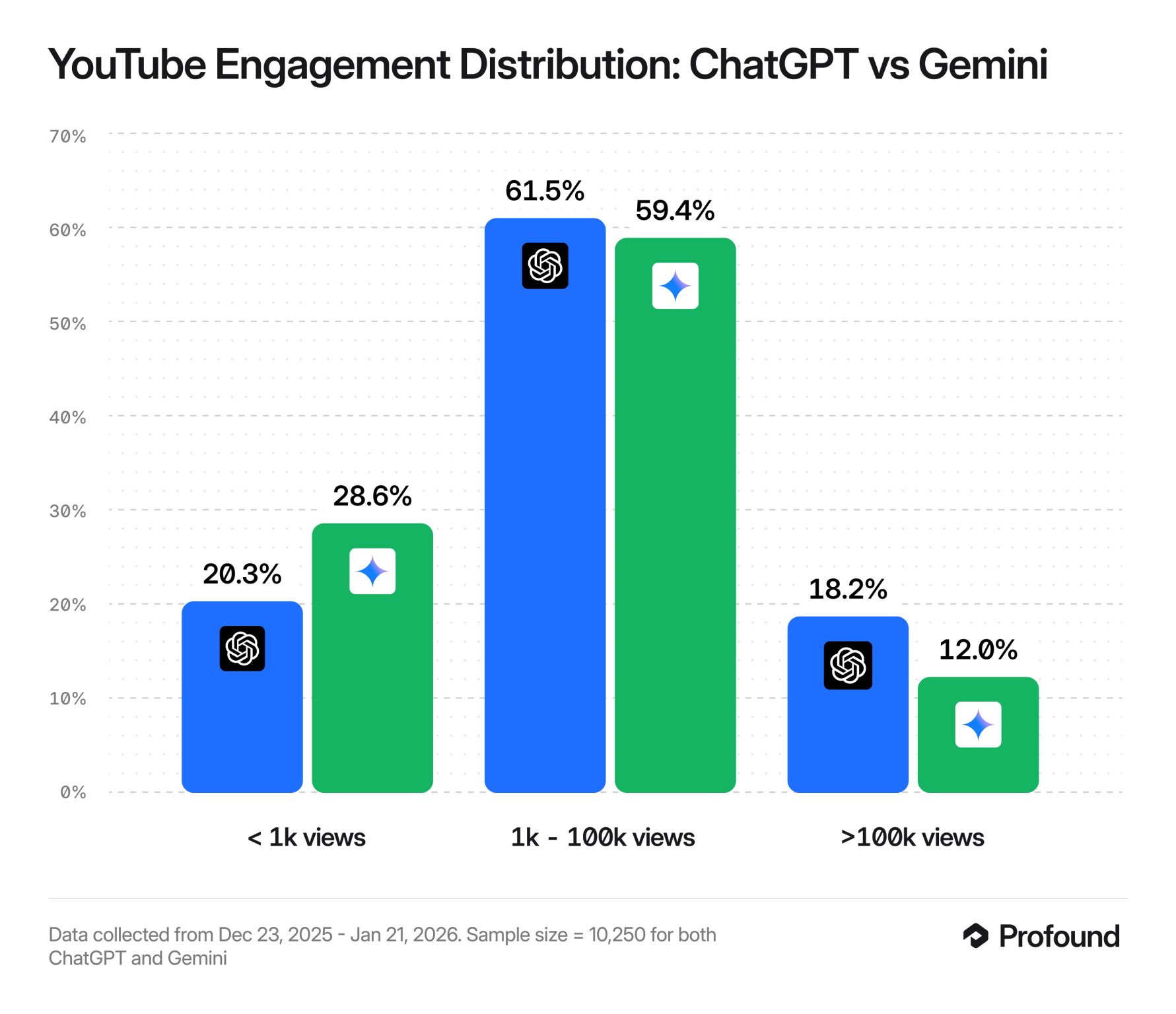

- Different LLMs require different strategies: Gemini cites videos with fewer views while ChatGPT prioritizes higher view counts

- "Freshness" matters—content updated within the last 13 weeks has significantly higher citation odds in AI search results

- Answer Engine Optimization (AEO) focuses on creating concise, scannable snippets that LLMs can easily cite as authoritative sources

Views and engagement used to be everything. But in 2026, there's a new metric that matters more: citations.

When someone asks ChatGPT or Google Gemini a question, which videos get cited as authoritative answers? It's not always the ones with millions of views. In VEED's recent webinar on video discoverability, Georgie Kemp (SEO Lead at VEED) and Nick Lafferty (Head of Growth Marketing at Profound) revealed how AI search is fundamentally changing video strategy.

The session, "Video Discoverability in 2026," delivered data-backed insights on which videos get cited in LLMs, why different AI platforms require different optimization approaches, and the exact workflow to make one video discoverable everywhere.

This recap breaks down everything you need to know about optimizing videos for AI search engines—from transcript optimization to citation tracking.

Why video citations matter more than views

The shift from engagement to authority

The session opened with a fundamental truth about the changing video landscape: "Video isn't just for views and engagement anymore. Video means your brand is listed, cited, wherever people are searching, specifically across AI tools like ChatGPT and Gemini."

When users ask AI search engines questions, the platforms cite sources to back up their answers. Getting your video cited means:

- Your brand appears as an authoritative source

- Users click through to your content from AI platforms

- You build credibility in your niche

- You capture traffic from AI-powered search (the fastest-growing search channel)

Traditional metrics like views and watch time still matter, but citation visibility represents a new frontier for brand discovery.

The transcript invisibility problem

Georgie Kemp highlighted the most critical optimization factor: "If you don't have a transcript, you could potentially be invisible to LLMs as a whole."

LLMs don't watch videos. They read transcripts. Without accurate, complete transcripts, your video content simply doesn't exist in the AI search ecosystem—regardless of how valuable your content might be.

This creates a massive opportunity for brands willing to optimize properly while competitors remain invisible.

Why different LLMs require different video strategies

YouTube dominates Gemini citations

Profound's latest data reveals stark differences in how AI platforms cite video content. Nick shared: "YouTube gets cited very often in AI search. It's the number one most-cited domain in Gemini versus being the 90th most cited in ChatGPT."

This disparity means your YouTube optimization strategy needs platform-specific adjustments:

For Google Gemini:

- Deeper engagement metadata (watch time, completion rate)

- Videos with fewer than 1,000 views still get cited frequently

- Strong topical authority signals through tags and descriptions

- Recent content (updated within 13 weeks) performs better

For ChatGPT:

- Higher view counts correlate with citations

- Broader reach and social proof matter more

- Cross-platform presence increases citation likelihood

- Established authority domains get prioritized

The market shift you can't ignore

Recent Similarweb data reveals dramatic platform changes: "ChatGPT still dominates overall traffic, but Gemini has grown 49% in the past few months, while ChatGPT is declining by 22%."

Georgie emphasized the strategic implication: "You can't just optimize for one. ChatGPT has the users today. Gemini is where the growth is happening."

Brands optimizing exclusively for one platform risk missing significant opportunities as the AI search landscape evolves rapidly.

Answer Engine Optimization (AEO): the new SEO

From pages to snippets

Traditional SEO focused on ranking pages. AEO focuses on powering answer snippets. Nick explained the shift: "What I want to talk about are actionable tips and strategies … to increase visibility in AI search so your brand is the one that shows up in the answer."

The fundamental difference: LLMs don't read entire pages or watch complete videos. They scan for concise, relevant snippets that directly answer user queries.

This changes everything about how you structure video content and metadata.

What makes content cite-able

For AI platforms to cite your video, you need:

Direct answers: Clear, specific responses to common questions in your niche.

Scannable structure: Chapters, timestamps, and section breaks that help LLMs identify relevant segments.

Fresh content: Regular updates signal relevance and accuracy to AI algorithms.

Complete metadata: Titles, descriptions, tags, and transcripts that provide context without watching the video.

Schema markup: Structured data that helps AI platforms understand and categorize your content.
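
To make the schema markup point concrete, here is a minimal Python sketch that builds JSON-LD for a video using the schema.org `VideoObject` and `Clip` types. The function name, the example URLs, and the sample values are all hypothetical, and individual search engines may require additional fields, so treat this as a starting point rather than a complete implementation.

```python
import json


def video_jsonld(name, description, upload_date, thumbnail_url, embed_url,
                 transcript, chapters):
    """Build a schema.org VideoObject as a JSON-LD string.

    chapters: list of (title, start_seconds, end_seconds) tuples.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "VideoObject",
        "name": name,
        "description": description,
        "uploadDate": upload_date,  # ISO 8601 date string
        "thumbnailUrl": thumbnail_url,
        "embedUrl": embed_url,
        "transcript": transcript,
        # Each chapter becomes a Clip so individual segments are addressable.
        "hasPart": [
            {
                "@type": "Clip",
                "name": title,
                "startOffset": start,
                "endOffset": end,
            }
            for title, start, end in chapters
        ],
    }
    return json.dumps(data, indent=2)


# Hypothetical values for illustration only.
markup = video_jsonld(
    name="How to Use AI for Video Translation",
    description="Hypothetical example description.",
    upload_date="2026-01-15",
    thumbnail_url="https://example.com/thumb.png",
    embed_url="https://example.com/embed/abc123",
    transcript="Full transcript text goes here.",
    chapters=[("Intro", 0, 45), ("Step-by-step demo", 45, 300)],
)
print(markup)
```

The resulting string would typically be placed in a `<script type="application/ld+json">` tag on the page that embeds the video.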

The anatomy of a cite-able video

Transcripts: your foundation for discoverability

Georgie emphasized the non-negotiable nature of transcripts: "Transcripts must be complete, accurate, and structured … because that's probably one of the best ways to help large language models understand your content."

Transcript best practices:

- Use an automatic subtitle generator as a starting point, then manually review for accuracy

- Include proper punctuation and formatting for readability

- Add timestamps that correspond to topic changes

- Embed transcripts on your website alongside video embeds

- Update transcripts when you refresh video content
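
As one possible way to turn reviewed captions into an embeddable, timestamped transcript, the sketch below converts SRT caption text into `[m:ss] text` lines. It assumes your captions are exported in standard SRT format. The function name and sample captions are hypothetical, and the webinar did not prescribe any particular tooling for this step.

```python
import re

# Matches an SRT timing line such as "00:01:05,000 --> 00:01:09,500".
TIMING = re.compile(r"(\d{2}):(\d{2}):(\d{2}),\d{3}\s*-->")


def srt_to_transcript(srt_text):
    """Convert SRT captions into '[m:ss] text' lines, one per caption cue."""
    lines = []
    # Cues are separated by blank lines.
    for block in re.split(r"\n\s*\n", srt_text.strip()):
        rows = block.strip().splitlines()
        # Find the timing row; everything after it is the caption text.
        for i, row in enumerate(rows):
            match = TIMING.match(row.strip())
            if match:
                hours, minutes, seconds = map(int, match.groups())
                total_minutes = hours * 60 + minutes
                text = " ".join(r.strip() for r in rows[i + 1:])
                lines.append(f"[{total_minutes}:{seconds:02d}] {text}")
                break
    return "\n".join(lines)


# Hypothetical sample captions.
sample = """1
00:00:00,000 --> 00:00:04,000
Welcome to the session.

2
00:01:05,000 --> 00:01:09,500
First, check your transcript
for accuracy.
"""
print(srt_to_transcript(sample))
```

Multi-line captions are joined into a single line per cue, which keeps the embedded transcript readable while preserving the timestamps that mark topic changes.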

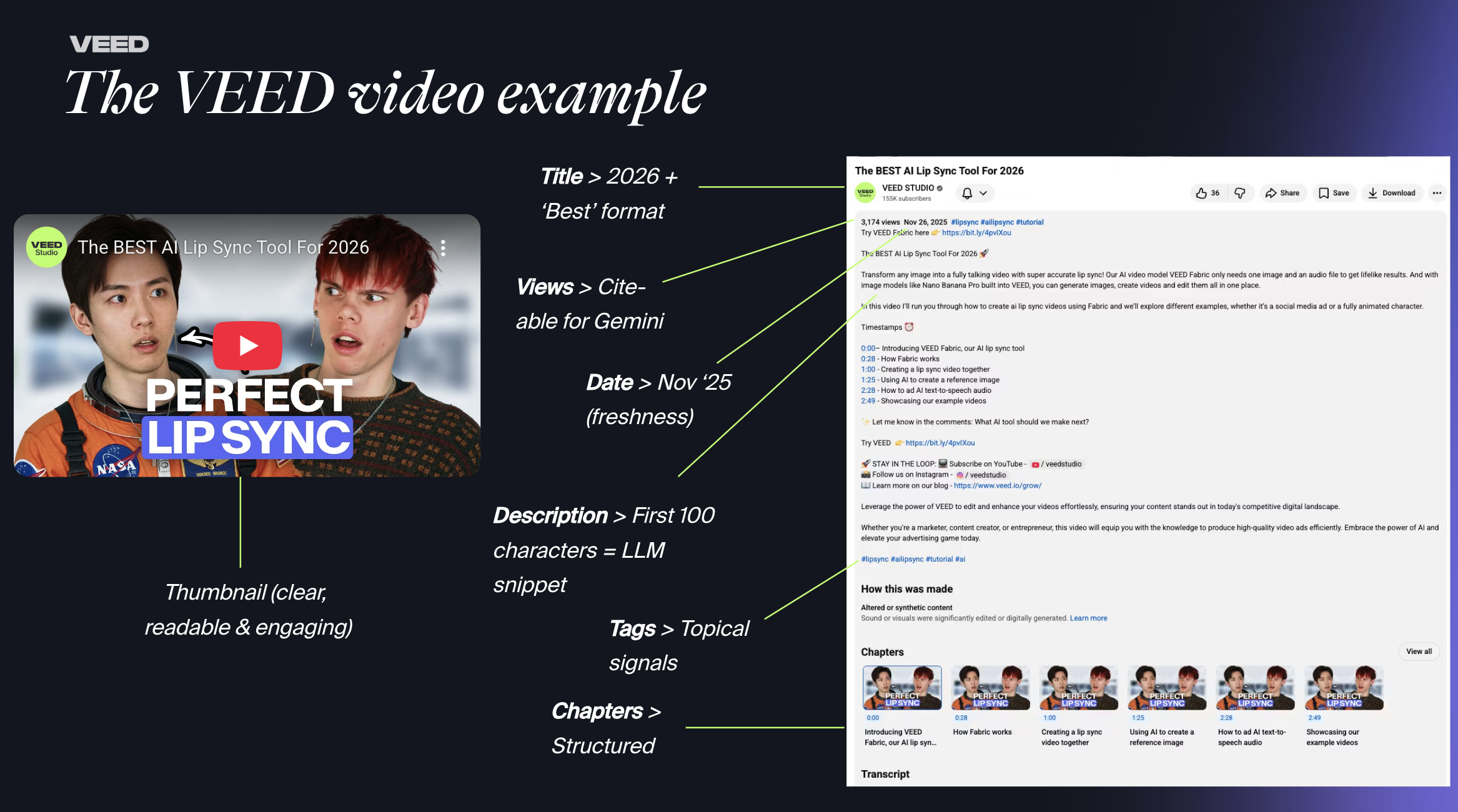

Titles that signal relevance

Long-tail, question-based titles perform better in AI search. Instead of "AI Video Tools," optimize for "AI Lip Sync Tool in 2025" or "How to Use AI for Video Translation."

These specific titles:

- Match actual user queries in AI search

- Signal exactly what your video covers

- Help LLMs understand topical relevance

- Reduce ambiguity about content value

Descriptions that work in 100 characters

AI platforms prioritize the first 100 characters of video descriptions. Georgie and Nick stressed front-loading critical information:

Weak description: "In this video, we'll be discussing various aspects of video marketing and how you can use them for your business..."

Strong description: "Learn 5 proven video marketing strategies that increase conversions by 34%. Includes templates, examples, and step-by-step workflows."

The second version immediately communicates value and specificity.
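
A quick way to audit descriptions at scale is to look only at the first 100 characters. The Python sketch below is a rough heuristic, not a rule from the webinar: the list of filler openers, the function name, and the key terms are all assumptions you would adapt to your own content.

```python
# Hypothetical list of low-information openers; adjust for your own content.
FILLER_OPENERS = ("in this video", "welcome to", "hey guys", "today we")


def check_description(description, key_terms, limit=100):
    """Report how well the first `limit` characters carry the key information."""
    preview = description[:limit].lower()
    return {
        "preview": description[:limit],
        "starts_with_filler": preview.startswith(FILLER_OPENERS),
        "missing_terms": [t for t in key_terms if t.lower() not in preview],
    }


weak = ("In this video, we'll be discussing various aspects of video "
        "marketing and how you can use them for your business...")
strong = ("Learn 5 proven video marketing strategies that increase "
          "conversions by 34%. Includes templates, examples, and "
          "step-by-step workflows.")

for label, text in (("weak", weak), ("strong", strong)):
    report = check_description(text, key_terms=["video marketing", "strategies"])
    print(label, report["starts_with_filler"], report["missing_terms"])
```

With the two example descriptions above, the weak version is flagged for its filler opener and for not mentioning "strategies" early, while the strong version passes both checks.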

Tags as topical authority signals

Georgie explained the strategic role of tags: "Tags act as topical authority signals. Additionally, chapters help LLMs understand and surface granular answers from your video."

Don't waste tags on overly broad terms. Use them to:

- Define your niche positioning

- Connect to related topics and queries

- Signal expertise in specific areas

- Help AI platforms categorize your content accurately

Chapters for granular discoverability

YouTube chapters (timestamps with descriptions) serve double duty:

- Help human viewers navigate long-form content

- Allow LLMs to cite specific segments rather than entire videos

A 20-minute video with clear chapters becomes multiple cite-able answers instead of one long, un-scannable source.
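
Chapter lists are easy to generate from a simple outline. The sketch below formats `(start_seconds, title)` pairs into description-ready lines and checks them against YouTube's published chapter requirements as commonly documented (first timestamp at 0:00, at least three timestamps in ascending order, each chapter at least 10 seconds long). The function name and chapter titles are hypothetical, and the rules are worth verifying against current YouTube Help pages.

```python
def format_chapters(chapters):
    """Turn (start_seconds, title) pairs into description-ready chapter lines.

    Checks the chapter rules assumed here: the first timestamp is 0:00,
    there are at least three chapters in ascending order, and each
    chapter lasts at least 10 seconds.
    """
    if len(chapters) < 3:
        raise ValueError("need at least three chapters")
    if chapters[0][0] != 0:
        raise ValueError("first chapter must start at 0:00")
    for (start, _), (next_start, _) in zip(chapters, chapters[1:]):
        if next_start - start < 10:
            raise ValueError("chapters must be ascending and 10+ seconds long")

    lines = []
    for start, title in chapters:
        hours, rest = divmod(start, 3600)
        minutes, seconds = divmod(rest, 60)
        stamp = (f"{hours}:{minutes:02d}:{seconds:02d}" if hours
                 else f"{minutes}:{seconds:02d}")
        lines.append(f"{stamp} {title}")
    return "\n".join(lines)


print(format_chapters([
    (0, "Why citations matter"),
    (95, "Transcript checklist"),
    (430, "Tracking citations"),
]))
```

Pasting the printed lines into the video description is the usual way to define chapters manually.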

The freshness factor: why 13 weeks matters

Content recency dramatically impacts citation likelihood. Nick and Georgie highlighted that videos updated within the last 13 weeks receive significantly higher citation rates in AI search results.

This creates an optimization opportunity:

Without re-filming:

- Update video descriptions with current data

- Add new chapters or timestamps

- Refresh thumbnails to reflect current branding

- Update embedded transcripts with additional context

- Re-upload with revised metadata if necessary

Quick content updates: Small metadata changes signal freshness to AI algorithms without requiring new video production.
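
If you keep a record of when each video was last updated, flagging the ones that have fallen outside the 13-week window takes only a few lines. This is a minimal sketch: the catalog structure, titles, and dates are hypothetical, and in practice the dates would come from your own content records.

```python
from datetime import date, timedelta

# The 13-week freshness window discussed in the webinar.
FRESHNESS_WINDOW = timedelta(weeks=13)


def stale_videos(videos, today):
    """Return titles whose last update falls outside the 13-week window."""
    cutoff = today - FRESHNESS_WINDOW
    return [v["title"] for v in videos if v["last_updated"] < cutoff]


# Hypothetical catalog for illustration.
catalog = [
    {"title": "AI Lip Sync Tool", "last_updated": date(2026, 1, 5)},
    {"title": "Video Translation Guide", "last_updated": date(2025, 8, 1)},
]
print(stale_videos(catalog, today=date(2026, 2, 1)))
# prints ['Video Translation Guide']
```

Running a check like this on a schedule gives you a refresh queue without having to audit every video by hand.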

Learn more about optimizing your content for AI citation in this deep dive by the LLMrefs team.

The 20-minute workflow: one video, maximum discoverability

Step 1: Create with citations in mind (5 minutes planning)

Before filming, identify:

- The specific question your video answers

- Related queries users ask in your topic area

- Key terms and phrases for transcripts and descriptions

- Chapter structure for topic segmentation

Step 2: Optimize core metadata (5 minutes)

After publishing:

- Write a long-tail, question-based title

- Front-load your description with value in the first 100 characters

- Add 5-10 specific, niche-relevant tags

- Create detailed chapters with timestamp descriptions

Step 3: Perfect your transcript (5 minutes)

- Review auto-generated captions for accuracy

- Correct industry terms, brand names, and technical language

- Add punctuation and formatting

- Embed transcript on your website with the video

Step 4: Cross-post strategically (5 minutes)

- Embed the YouTube video on your website or blog

- Share with context on LinkedIn, Twitter, or relevant platforms

- Include the video in newsletter content if applicable

- Link back to the full video from social clips or excerpts

This streamlined workflow maximizes citation potential without overwhelming your content calendar.

Tracking citations and measuring success

Using AI search tracking tools

Nick demonstrated Profound's citation tracking capabilities: "We send millions of prompts daily to AI platforms and organize citations into topics—for example, which videos are being cited and how often."

Citation tracking reveals:

- Which videos get cited across different AI platforms

- What queries trigger your video citations

- How often your brand appears versus competitors

- Which metadata optimizations correlate with citation increases

Key metrics to monitor in AI search

Beyond traditional video analytics, track:

Citation frequency: How often your videos appear in AI search results across platforms.

Citation context: What questions or queries trigger your video citations.

Platform distribution: Whether Gemini, ChatGPT, or other LLMs cite your content more frequently.

Competitor benchmarks: How your citation rate compares to others in your niche.
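
The four metrics above can be computed from any export of citation records. The sketch below assumes a simple, hypothetical record format (`platform`, `query`, `cited_brand`). It is not Profound's data model or API, just an illustration of how the numbers relate to each other.

```python
from collections import Counter


def summarize_citations(records, brand):
    """Summarize records shaped like
    {"platform": ..., "query": ..., "cited_brand": ...}."""
    ours = [r for r in records if r["cited_brand"] == brand]
    return {
        # Citation frequency: share of all tracked citations that are ours.
        "citation_share": len(ours) / len(records) if records else 0.0,
        # Platform distribution: where our citations come from.
        "by_platform": dict(Counter(r["platform"] for r in ours)),
        # Citation context: which queries trigger our citations most often.
        "top_queries": Counter(r["query"] for r in ours).most_common(3),
        # Competitor benchmark: citation counts per brand.
        "by_brand": dict(Counter(r["cited_brand"] for r in records)),
    }


# Hypothetical sample data.
records = [
    {"platform": "Gemini", "query": "how to add subtitles", "cited_brand": "ours"},
    {"platform": "Gemini", "query": "best video editor", "cited_brand": "rival"},
    {"platform": "ChatGPT", "query": "how to add subtitles", "cited_brand": "ours"},
    {"platform": "Gemini", "query": "how to translate video", "cited_brand": "ours"},
]
summary = summarize_citations(records, brand="ours")
print(summary)
```

Comparing these summaries before and after a metadata change is one simple way to see whether an optimization correlates with more citations.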

Testing and iteration

Launch videos with optimized metadata, monitor citation performance, and refine:

- Test different title formats (question-based vs. descriptive)

- Experiment with description lengths and structures

- Try various tag combinations for topical authority

- Update content freshness on different schedules

Performance data reveals what works for your specific niche and audience better than assumptions.

Beyond viral: relevance over reach

Georgie and Nick challenged the traditional video success model: "What we're seeing right now is that video is a big bet to get listed where search is heading, specifically across LLMs."

The new video strategy prioritizes:

Targeted answers over mass appeal: Create videos that definitively answer specific questions rather than trying to appeal to everyone.

Niche authority over viral moments: Build expertise in focused topic areas that AI platforms recognize and cite consistently.

Long-term citation value over short-term views: A video with 500 views that gets cited regularly provides more value than a viral video that never appears in AI search.

Practical video formats for AI search

Question-driven how-tos

Videos that directly answer "how to" queries perform exceptionally well in AI citations. Structure these with:

- Clear problem statement in the first 10 seconds

- Step-by-step process with chapter timestamps

- Visual demonstrations of each step

- Summary of key takeaways

"Best of" comparison lists

AI platforms frequently cite videos comparing tools, strategies, or approaches. Optimize these with:

- Specific criteria for evaluation

- Individual chapters for each item discussed

- Data or evidence supporting recommendations

- Clear use-case scenarios

Expert explanations of complex topics

Educational content that breaks down complicated subjects gets cited as authoritative sources. Include:

- Simple definitions of technical terms

- Real-world examples and analogies

- Visual aids that clarify concepts

- Timestamp navigation for specific sub-topics

Tools mentioned in the webinar

Citation tracking and analysis:

- Profound: Monitor which videos get cited across AI search platforms, track citation frequency, and analyze competitor performance

Video optimization:

- VEED: Create, edit, and optimize videos with automatic transcription, AI video tools, and platform-specific formatting

Content freshness:

- YouTube Studio: Update metadata, refresh thumbnails, and manage video content without re-uploading

Wrapping up: your AI search optimization action plan

Here's what to remember from the video discoverability webinar:

- Transcripts are non-negotiable: Without them, LLMs can't read your content—you're invisible to AI search regardless of video quality.

- Platform strategies differ: Optimize for both Gemini (engagement metadata, citations even at low view counts) and ChatGPT (higher views, broader reach).

- Freshness wins citations: Update content every 13 weeks to maintain relevance and citation likelihood in AI search results.

- Track what matters: Monitor citations across platforms using tools like Profound to measure real AI search visibility.

Next Step: Choose one existing video this week and optimize it for AI search—add accurate transcripts, update the description's first 100 characters, create detailed chapters, and add niche-specific tags.

📚 Resources from the webinar:

- Georgie's slides: Video optimization strategies for AI search

- Nick's slides: 2026 latest video citation data and tracking insights

- Full masterclass recording: Watch the complete session on-demand
